Measuring training effectiveness is one of the many responsibilities of learning and development professionals and a priority for senior leadership. According to Statista Research Department, U.S. businesses collectively invest more than $80 billion in training their employees every year, and global spending on training and development has increased by 400% in 11 years. Investment on this scale underscores the importance of measuring training effectiveness and business impact. As organizations expand their training offerings to upskill and reskill employees, learning and development professionals are also hungry for guidance on creating and demonstrating the value of training to their organizations.

The Kirkpatrick model is a well-known framework for evaluating training programs; however, most professionals get stuck implementing the model’s Level 3 (behavior) and Level 4 (results). It is no wonder, then, that learning and development professionals seek other methods or creative strategies to evaluate the success of training programs. This article explores strategies for achieving Levels 3 and 4 of the Kirkpatrick model and discusses Brinkerhoff’s Success Case Method as an alternative approach to evaluating training. Depending on your evaluation goals, one or more of these approaches could provide structure for evaluating training at your organization.

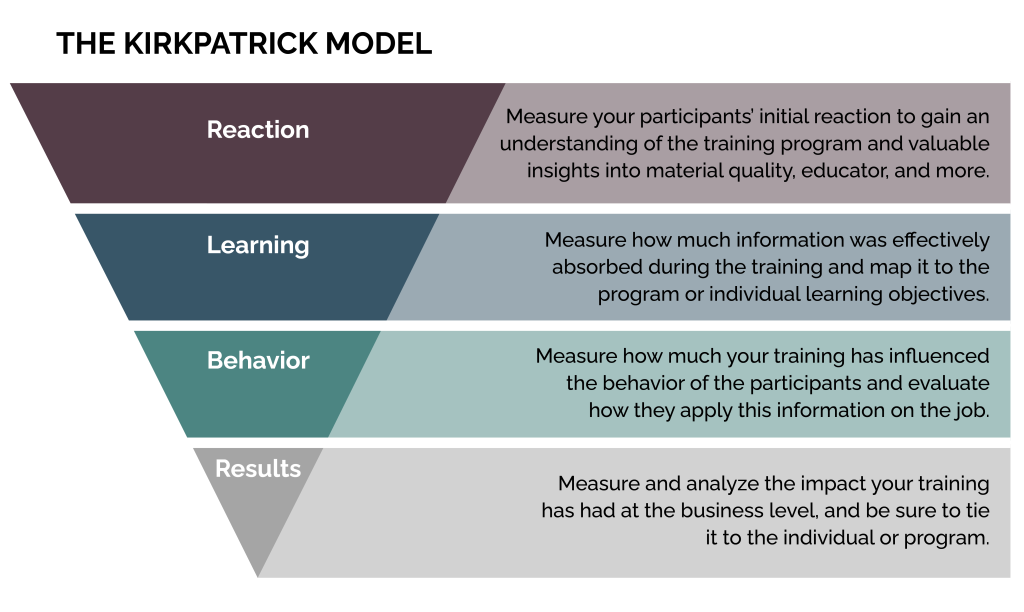

Learning and development professionals have embraced the Kirkpatrick model and continue to adopt it as the standard approach for evaluating training programs. The model dates back to 1959, when it was published in the Journal of the American Society of Training Directors as a set of techniques for evaluating training according to four levels. Its primary strength is that it is easy to understand and implement, since it includes only four levels: reaction, learning, behavior, and results.

Kirkpatrick Model

Level 1

Level 1 evaluations (reaction) measure participants’ overall response to the training program. This includes asking participants how satisfied they were with the training, how engaging it was, and how relevant the content is to their jobs. Level 1 is considered simple and is typically achieved by administering a formative evaluation as a survey immediately following training.

Level 2

Level 2 evaluations (learning) measure the increase in participants’ knowledge due to the instruction during training. In level 2, it is common to assess learning using knowledge checks, discussion questions, role-play, simulations, and focus group interviews.

Level 3

Level 3 (behavior) aims to measure changes in participants’ on-the-job behavior that result from the instruction. This is essential because training alone will not yield enough organizational results to be viewed as successful. However, this level is also considered somewhat difficult to evaluate, as it requires measuring knowledge transfer.

Level 4

Finally, there is Level 4 (results). Level 4 is the reason training is performed. Training’s job is not complete until its contributions to business results can be demonstrated and acknowledged by stakeholders. Again, the majority of learning professionals struggle to connect training to performance and results for critical learning programs. In conversations with other learning and development professionals, the standard explanation for this difficulty is the time required to measure results and to decide on a practical approach to capturing key performance indicators. Levels 3 and 4 truly are the missing link in moving from learning to results. So what can organizations do to measure the impact of training on behavior and results?

Strategies for Measuring Level 4 (Results)

One strategy organizations can use to achieve the desired results from training programs is to create leading indicators. Leading indicators provide personalized targets that all contribute to organizational outcomes. Think of them as little flags marching toward the finish line, which represents the desired corporate results. They also establish a connection between the performance of critical behaviors and the organization’s highest-level impact.

There are two distinct types of leading indicators, internal and external, and both can provide quantitative and qualitative data. Internal leading indicators arise from within the organization and are typically the first to appear. They relate to production output, quality, sales, cost, safety, employee retention, or other critical outcomes for your department, group, or programs that contribute to Level 4 results. External leading indicators can also be identified when measuring the success of a training program. For example, external leading indicators relate to customer response, customer retention, and industry standards.

The benefit of identifying and leveraging leading indicators is that they help keep your initiatives on track by serving as the last line of defense against possible failure at Level 4. In addition, monitoring leading indicators along the way gives you time to identify barriers to success and apply the proper interventions before ultimate outcomes are jeopardized. Finally, leading indicators provide important data connecting training, on-the-job performance, and the highest-level result.

The first step in evaluating leading indicators is to define which data you can borrow and which data you will need to build tools to gather. For example, human resource metrics may already exist and can be linked to the training program or initiative. If the data is not already available within the organization, it is crucial to define which tools to build to gather it. Typical examples of tools that may need to be built are surveys and structured question sets for interviews and focus groups.
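For teams that keep their indicator data in a spreadsheet or script, the monitoring idea above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the indicator names, values, targets, and the `higher_is_better` flag are all hypothetical, not figures from any real program.

```python
from dataclasses import dataclass


@dataclass
class LeadingIndicator:
    """One leading indicator with a target (all example values are made up)."""
    name: str
    source: str                    # "internal" or "external"
    current: float
    target: float
    higher_is_better: bool = True  # assumption: some metrics should go down

    def on_track(self) -> bool:
        # An indicator is on track when it meets or beats its target.
        if self.higher_is_better:
            return self.current >= self.target
        return self.current <= self.target


def flag_off_track(indicators):
    """Return the names of indicators that currently miss their target."""
    return [ind.name for ind in indicators if not ind.on_track()]


# Hypothetical data: two internal indicators and one external indicator.
indicators = [
    LeadingIndicator("employee_retention_pct", "internal", 91.0, 90.0),
    LeadingIndicator("safety_incidents_per_quarter", "internal", 6, 4,
                     higher_is_better=False),
    LeadingIndicator("customer_retention_pct", "external", 82.0, 85.0),
]

off_track = flag_off_track(indicators)
print(off_track)
```

Running a check like this on a regular schedule is one way to spot barriers early, while there is still time to intervene before Level 4 results are affected.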

Alternative Approach to the Kirkpatrick Model

As an alternative to the Kirkpatrick model for measuring training success, the Success Case Method (SCM) by Robert Brinkerhoff has been widely adopted across several industries. This method involves identifying the most and least successful individuals who participated in the training event. Once these individuals are identified, interviews and other techniques, such as observation, can be used to better understand the training’s effects. By comparison, the Kirkpatrick model seeks to uncover a program’s results, while the SCM seeks to discover how the program affected the most successful participants.

One weakness of the SCM is that only a small sample of participants is asked to provide feedback on the training program, which may omit valuable information that could have been collected if all participants had been included. This evaluation method may be more beneficial for programs that aim to understand how participants are using the training content on the job, and it tends to produce more qualitative data than quantitative metrics. Both approaches have benefits and disadvantages in measuring training effectiveness, so the key is selecting the best approach for your training program, or perhaps combining the two.
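The first step of the SCM, identifying the most and least successful participants, can be sketched as a simple ranking. The Python example below is a minimal illustration, not Brinkerhoff’s procedure: it assumes each participant already has a single numeric success score (for example, from a short post-training survey), and the participant IDs, scores, and the `select_success_cases` helper are hypothetical.

```python
def select_success_cases(scores, n=2):
    """Pick the n highest- and n lowest-scoring participants for interviews.

    `scores` maps a participant ID to a numeric success score. How that
    score is produced is an assumption left to the evaluator.
    """
    if len(scores) < 2 * n:
        raise ValueError("need at least 2 * n participants to avoid overlap")
    # Rank participants from highest to lowest score.
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    most_successful = [pid for pid, _ in ranked[:n]]
    least_successful = [pid for pid, _ in ranked[-n:]]
    return most_successful, least_successful


# Hypothetical survey scores on a 1-5 scale.
scores = {
    "P01": 4.6, "P02": 2.1, "P03": 3.8,
    "P04": 1.5, "P05": 4.9, "P06": 3.0,
}

most, least = select_success_cases(scores, n=2)
print("Interview (most successful):", most)
print("Interview (least successful):", least)
```

The two short lists then become the interview and observation pool, which is where the qualitative work of the method actually happens.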

Here at Radiant Digital, we enjoy collaborating with organizations to develop training effectiveness strategies. Partner with us and learn how we can support your learning and development team.